Installation steps

This guide describes the installation of the SKOOR RPM packages using dnf/yum.

This installation guide is intended for installing SKOOR on a fresh system. To upgrade an existing SKOOR system, use the upgrade guide instead.

Prerequisites

Disable SELinux

Before installing SKOOR Engine, SELinux must not be in enforcing mode. Display the SELinux status:

sestatus

Check whether the current mode is enforcing:

SELinux status:                 enabled
...
Current mode:                   enforcing

If so, set the mode to permissive or disable SELinux in the SELinux configuration file:

vi /etc/selinux/config

Set the SELINUX parameter to permissive or disabled:

SELINUX=disabled

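Activate the change on the running system and verify it with sestatus. setenforce 0 switches the running system to permissive mode; a change to disabled only takes full effect after a reboot: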

setenforce 0
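When the installation is scripted, the configuration change can be made without an editor. A minimal sketch, assuming the standard SELINUX=<mode> line in /etc/selinux/config; set_selinux_mode is a hypothetical helper, not part of SKOOR:

```shell
# Hypothetical helper: set SELINUX=<mode> in the SELinux configuration file.
# Only "permissive" and "disabled" are accepted, matching the steps above.
set_selinux_mode() {
    local mode="$1" cfg="${2:-/etc/selinux/config}"
    case "$mode" in
        permissive|disabled) ;;
        *) echo "unsupported mode: $mode" >&2; return 1 ;;
    esac
    # Replace the whole SELINUX= line; SELINUXTYPE= does not match this pattern
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$cfg"
}

# Example (as root):
#   set_selinux_mode permissive
#   setenforce 0
#   sestatus
```

Only the SELINUX= line changes; the SELINUXTYPE= line stays untouched.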

Allow cron for users

If cron access is restricted (that is, /etc/cron.allow exists), the users required by the SKOOR software must be allowed to use cron by adding them to the following file:

/etc/cron.allow

Users (one per line):

postgres
eranger
reranger
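Adding the users can be scripted so that it is safe to run more than once. A sketch; allow_cron_users is a hypothetical helper name:

```shell
# Hypothetical helper: add users to a cron.allow file, one per line,
# skipping users that are already listed.
allow_cron_users() {
    local file="$1" user
    shift
    touch "$file"
    # Terminate a last line that lacks a trailing newline before appending
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        echo >> "$file"
    fi
    for user in "$@"; do
        # Append only users that are not listed yet (exact line match)
        grep -qxF -- "$user" "$file" || printf '%s\n' "$user" >> "$file"
    done
}

# Example (as root):
#   allow_cron_users /etc/cron.allow postgres eranger reranger
```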

SKOOR repository GPG fingerprints:

RPM-GPG-KEY-SKOOR:

0C18 95B8 11D4 71E5 D043 EFA3 69E1 147C 2CB4 0F3A

RPM-GPG-KEY-PGDG:

D4BF 08AE 67A0 B4C7 A1DB CCD2 40BC A2B4 08B4 0D20

RPM-GPG-KEY-TIMESCALEDB:

1005 fb68 604c e9b8 f687 9cf7 59f1 8edf 47f2 4417
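The fingerprints above are printed in different letter cases, so a comparison should ignore spaces and case. A minimal sketch: normalize_fpr, key_file_fpr and fpr_matches are hypothetical helpers, key_file_fpr needs a GnuPG version that supports --show-keys (2.2.8 or later), and the key file location in the example is an assumption:

```shell
# Hypothetical helper: remove whitespace and convert to upper case
normalize_fpr() {
    printf '%s' "$1" | tr -d '[:space:]' | tr '[:lower:]' '[:upper:]'
}

# Hypothetical helper: print the fingerprint of the first key in a key file
# without importing it into a keyring (field 10 of the "fpr" colon record)
key_file_fpr() {
    gpg --show-keys --with-colons "$1" 2>/dev/null | awk -F: '$1 == "fpr" { print $10; exit }'
}

# Succeeds if both fingerprints are equal after normalization
fpr_matches() {
    [ "$(normalize_fpr "$1")" = "$(normalize_fpr "$2")" ]
}

# Example (key file path is an assumption):
#   fpr_matches "0C18 95B8 11D4 71E5 D043 EFA3 69E1 147C 2CB4 0F3A" \
#       "$(key_file_fpr /etc/pki/rpm-gpg/RPM-GPG-KEY-SKOOR)" && echo "fingerprint OK"
```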

SKOOR Engine Installation (Single server)

For systems with database replication, two separate servers must be installed as described in this section. See the Database Replication Guide for further configuration steps.

PostgreSQL Installation

PostgreSQL 13 is the database server needed by the SKOOR Engine. It must be installed before the SKOOR software.

Install the PostgreSQL server using the SKOOR PostgreSQL installer package:

dnf install eranger-postgresql

This package performs the following steps:

- Installs postgresql13-server, timescaledb-2-postgresql-13 and eranger-database-utils through its dependencies
- Initializes a database cluster using initdb
- Tunes postgresql.conf for TimescaleDB
- Initializes a database (plain database, no schema nor data) that can be used by the SKOOR Server
- Opens TCP port 5432 on the firewall
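To confirm what the package wrote, the effective value of a parameter can be read from postgresql.conf. A minimal sketch, assuming the PGDG default data directory /var/lib/pgsql/13/data; pg_conf_value is a hypothetical helper. PostgreSQL uses the last active entry when a parameter appears more than once, and the sketch does the same:

```shell
# Hypothetical helper: print the effective value of a postgresql.conf parameter.
# Comments are stripped, "name = value" and "name value" are both accepted,
# and the last active entry wins.
# Limitation: a '#' inside a quoted value is treated as a comment.
pg_conf_value() {
    local file="$1" param="$2"
    awk -v p="$param" '
        { sub(/#.*/, "") }
        $0 ~ ("^[ \t]*" p "([ \t]*=|[ \t])") {
            v = $0
            sub("^[ \t]*" p "[ \t]*=?[ \t]*", "", v)
            sub(/[ \t]+$/, "", v)
            val = v
        }
        END { if (val != "") print val }' "$file"
}

# Example (path assumed to be the PGDG default for PostgreSQL 13):
#   pg_conf_value /var/lib/pgsql/13/data/postgresql.conf shared_preload_libraries
```

On a TimescaleDB system, shared_preload_libraries is expected to contain timescaledb.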

PgBouncer Installation

PgBouncer is used as a connection pool and sits between SKOOR and the PostgreSQL server.

Install PgBouncer using the SKOOR PgBouncer installer package:

dnf install eranger-pgbouncer

This package performs the following steps:

- Installs pgbouncer through its dependencies
- Reconfigures PostgreSQL (postgresql.conf):
  - listen_addresses = 'localhost' (only listen for local connections)
  - unix_socket_directories = '/var/run/postgresql-backend' (do not use the default PostgreSQL UNIX socket)
  - max_connections = 300 (allow 300 connections)
- Configures PgBouncer:
  - listen_addr = *
  - listen_port = 5432
  - unix_socket_dir = /var/run/postgresql
  - max_client_conn = 300
  - default_pool_size = 60
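The resulting PgBouncer settings can be checked with a small INI reader. A sketch, assuming PgBouncer's configuration is in /etc/pgbouncer/pgbouncer.ini (an assumed path) and uses the usual INI layout in which lines starting with ; or # are comments; ini_get is a hypothetical helper:

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file
# (the last occurrence wins; full-line comments are skipped).
ini_get() {
    local file="$1" section="$2" key="$3"
    awk -v s="[$section]" -v k="$key" '
        /^[ \t]*[;#]/ { next }
        /^[ \t]*\[/ {
            h = $0; gsub(/[ \t]/, "", h); insec = (h == s); next
        }
        insec && index($0, "=") {
            name = substr($0, 1, index($0, "=") - 1)
            gsub(/[ \t]/, "", name)
            if (name == k) {
                v = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)
                val = v
            }
        }
        END { print val }' "$file"
}

# Example (path is an assumption):
#   ini_get /etc/pgbouncer/pgbouncer.ini pgbouncer max_client_conn
```

After the package has run, the example should print 300.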

Standard Server Installation

For customers requiring only the base set of software (i.e. most customers), run the following command to install the required packages:

dnf install eranger-server

Check the installed SKOOR Engine packages:

dnf list installed | grep eranger

Expected output:

eranger-agent.x86_64 <version>
eranger-auth.x86_64 <version>
eranger-collector.x86_64 <version>
eranger-collector-eem.x86_64 <version>
eranger-collector-mail.x86_64 <version>
eranger-common.x86_64 <version>
eranger-database-utils.x86_64 <version>
eranger-doc.x86_64 <version>
eranger-nodejs.x86_64 <version>
eranger-postgresql.x86_64 <version>
eranger-pymodules.x86_64 <version>
eranger-report.x86_64 <version>
eranger-server.x86_64 <version>
eranger-syncfs.x86_64 <version>
eranger-ui.x86_64 <version>
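The comparison with the expected list can be automated. A sketch: missing_packages is a hypothetical helper that prints every expected name not found in the installed list; the names are copied from the expected output above and may differ between SKOOR versions:

```shell
# Package names from the expected output above (may vary by SKOOR version)
EXPECTED_ERANGER_PACKAGES="eranger-agent eranger-auth eranger-collector
eranger-collector-eem eranger-collector-mail eranger-common
eranger-database-utils eranger-doc eranger-nodejs eranger-postgresql
eranger-pymodules eranger-report eranger-server eranger-syncfs eranger-ui"

# Hypothetical helper: $1 is a newline-separated list of installed package
# names; every further argument is an expected name. Missing names are printed.
missing_packages() {
    local installed="$1" pkg
    shift
    for pkg in "$@"; do
        printf '%s\n' "$installed" | grep -qxF -- "$pkg" || printf '%s\n' "$pkg"
    done
}

# Example (the variable is left unquoted on purpose to split it into names):
#   missing_packages "$(rpm -qa --qf '%{NAME}\n' 'eranger-*')" $EXPECTED_ERANGER_PACKAGES
```

Empty output means every listed package is installed.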

SKOOR versions before 8.1 install Google Chrome as a dependency. Google Chrome adds its own YUM repository to update itself to newer versions, but SKOOR needs a very specific version, so this newly created repository must be disabled. SKOOR 8.1 and later no longer require Google Chrome, so the repository is not created there. On SKOOR < 8.1, disable it with:

sed -i 's/enabled=1/enabled=0/g' /etc/yum.repos.d/google-chrome.repo
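On systems where the repository file does not exist, the sed command fails. A sketch that checks for the file first; disable_repo_file is a hypothetical helper:

```shell
# Hypothetical helper: disable a YUM repository file if it exists
disable_repo_file() {
    local repo="${1:-/etc/yum.repos.d/google-chrome.repo}"
    if [ ! -f "$repo" ]; then
        echo "no $repo, nothing to disable"
        return 0
    fi
    # Turn every "enabled=1" line (optional blanks around "=") into enabled=0
    sed -i 's/^enabled[[:space:]]*=[[:space:]]*1[[:space:]]*$/enabled=0/' "$repo"
}
```

Where the dnf-plugins-core package is installed, dnf config-manager --set-disabled followed by the repository ID is an alternative; the ID google-chrome would be an assumption.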

SKOOR Engine Collector Installation

Since release 5.5, there are two options for installing an external collector: a full installation and a basic installation. The basic installation has a limited feature set and fewer dependencies, and it can later be extended to a full installation by adding packages.

Full SKOOR Engine Collector installation:

To install an external SKOOR Engine Collector use the following command for the full installation:

dnf install eranger-collector eranger-collector-eem eranger-collector-mail

Basic SKOOR Engine Collector installation:

To install a basic external SKOOR Engine Collector use the following command:

dnf install eranger-collector

Additional packages for the basic SKOOR Engine Collector:

To enable EEM Jobs on the external collector you can install the plugin with the following command:

dnf install eranger-collector-eem

To enable Mail Jobs (EWS, IMAP, POP3 or SMTP) on the external collector you can install the plugin with the following command:

dnf install eranger-collector-mail

Communication to SKOOR Engine

After installing a new external collector, the communication to the SKOOR Engine must be configured. The following section shows different possibilities to achieve this.

General

For the SKOOR Engine, a collector is a special kind of user, so every external collector must be configured in the UI. Create a locally authenticated user with the role Collector in /root /Users /Users and set a password.

All further configuration is done in the collector's configuration file on the collector server:

/etc/opt/eranger/eranger-collector.cfg

First, identify the server_id of the SKOOR Engine. On the SKOOR Engine host, open eranger-server.cfg:

/etc/opt/eranger/eranger-server.cfg

Find the parameter server_id in the configuration (if commented as in the following example, the id is 1):

#server_id = 1

If an external collector delivers measurements to more than one SKOOR Engine, the server_id parameter must be different on every SKOOR Engine.
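The effective server_id can also be read with a script. A sketch; effective_server_id is a hypothetical helper that returns the last uncommented server_id line and falls back to the default of 1 when there is none (how the SKOOR Engine itself treats duplicate entries is not covered here):

```shell
# Hypothetical helper: print the server_id configured in eranger-server.cfg
effective_server_id() {
    local cfg="${1:-/etc/opt/eranger/eranger-server.cfg}" id
    # Last active "server_id = N" line; commented lines start with '#' and are skipped
    id=$(awk -F'=' '/^[ \t]*server_id[ \t]*=/ { v = $2; gsub(/[ \t]/, "", v); last = v } END { print last }' "$cfg")
    # A commented or missing parameter means the default id 1
    printf '%s\n' "${id:-1}"
}
```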

TCP

This is the standard way collectors communicate with the SKOOR Engine. The communication is not encrypted.

The following parameters must be configured in eranger-collector.cfg:

Set the server1_id parameter to the value configured on the SKOOR Engine server as server_id (default is 1)

Set the server1_address parameter to the SKOOR Engine hostname or IP address (UNIX sockets are used for local collectors only)

Set server1_user and server1_passwd as configured in the SKOOR Engine

server1_id = 1
server1_address = 10.10.10.10
#server1_port = 50001
#server1_domain =
server1_user = collector-user
server1_passwd = collector-password
#server<server_id>_fetch_parse_dir (server_id instead of index)!
#server1_fetch_parse_dir= /var/opt/run/eranger/collector/tmp
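Editing several parameters by hand is error-prone, so the settings can also be applied with a small helper. A minimal sketch, assuming the key = value format shown above, that each key appears only once, and GNU sed (for the 0,/regex/ address); cfg_set is hypothetical. It replaces the first active or commented-out line for a key and appends the key if it is missing:

```shell
# Hypothetical helper: set "key = value" in a collector configuration file
cfg_set() {
    local file="$1" key="$2" value="$3" esc
    # Escape characters that are special in a sed replacement (& \ and the | delimiter)
    esc=$(printf '%s' "$value" | sed 's/[&|\\]/\\&/g')
    if grep -Eq "^[#[:space:]]*${key}[[:space:]]*=" "$file"; then
        # Rewrite only the first matching line (active or commented out)
        sed -i -E "0,/^[#[:space:]]*${key}[[:space:]]*=.*/s|^[#[:space:]]*${key}[[:space:]]*=.*|${key} = ${esc}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Example for the TCP setup (values from the example above):
#   cfg=/etc/opt/eranger/eranger-collector.cfg
#   cfg_set "$cfg" server1_id 1
#   cfg_set "$cfg" server1_address 10.10.10.10
#   cfg_set "$cfg" server1_user collector-user
#   cfg_set "$cfg" server1_passwd collector-password
```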

HTTP/HTTPS

Communication using HTTP with no encryption is discouraged. If required for some reason, the httpd server on the SKOOR Engine must be configured to allow this kind of communication first.

Collectors using HTTP(S) for communication cannot be switched automatically when a failover is performed in a primary/standby setup.

The following section describes how to set up encrypted communication using HTTPS. For encryption, the root CA certificate used by the SKOOR Engine server must be copied to the collector system. Standard Linux paths can be used to place it in the filesystem.

Configure the required parameters in eranger-collector.cfg:

Set the server1_id parameter to the value configured on the SKOOR Engine server as server_id (default is 1)

Set the server1_address parameter to the SKOOR Engine hostname or IP address in the form of a URL as shown below

Make sure the server1_port parameter is commented out; otherwise, communication will fail

Set server1_user and server1_passwd as configured in the SKOOR Engine

Configure either server1_ssl_cacert or server1_ssl_capath to point to the location where the certificate was copied

server1_id = 1
server1_address = https://10.10.10.10/skoor-collector
#server1_port = 50001
#server1_domain =
server1_user = collector-user
server1_passwd = collector-password
#server<server_id>_fetch_parse_dir (server_id instead of index)!
#server1_fetch_parse_dir= /var/opt/run/eranger/collector/tmp
server1_ssl_cacert = /etc/pki/tls/certs/rootCA.pem
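The server1_port requirement can be enforced with a one-line edit. A sketch, assuming GNU sed; cfg_comment_out is a hypothetical helper that puts # in front of an active key = value line and leaves already commented lines alone:

```shell
# Hypothetical helper: comment out an active "key = value" line
cfg_comment_out() {
    local file="$1" key="$2"
    # Keep leading blanks, insert '#' directly before the key
    sed -i -E "s/^([[:space:]]*)(${key}[[:space:]]*=)/\1#\2/" "$file"
}

# Example:
#   cfg_comment_out /etc/opt/eranger/eranger-collector.cfg server1_port
```

Running it twice is harmless, because a commented line no longer matches.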

HTTPS with client authentication

Client authentication must be enabled on the SKOOR Engine first. Open the respective web server configuration file for this purpose:

/etc/httpd/conf.d/skoor-engine-over-http.conf

Uncomment the SSLVerifyClient directive:

<Location "/skoor-collector">
    ExpiresActive On
    ExpiresDefault "now"
    ProxyPass http://localhost:50080 retry=0 connectiontimeout=15 timeout=30
    SSLVerifyClient require
</Location>

Reload httpd:

systemctl reload httpd

On the collector, the following eranger-collector.cfg parameters control certificate verification and client authentication:

| Name | Description |
|---|---|
| server1_ssl_cacert | Full path to the root CA certificate; the collector uses this specific file |
| server1_ssl_capath | Path to a directory containing the root CA certificate; the collector searches it for the correct certificate |
| server1_ssl_verify_peer | If set to true (default), the SKOOR Engine server's certificate is verified |
| server1_ssl_verify_host | If set to true (default), the hostname of the SKOOR Engine server is verified |
| server1_ssl_cert_client_public_key | The public key used for client authentication (the collector's certificate) |
| server1_ssl_cert_client_private_key | The private key used for client authentication (the collector's private key) |
| server1_ssl_cert_client_private_key_passwd | Password to read the collector's private key, if one is set |
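Client authentication only works if the files configured in server1_ssl_cert_client_public_key and server1_ssl_cert_client_private_key belong together. A minimal check with the openssl command-line tool compares the public key embedded in the certificate with the one derived from the private key; cert_matches_key is a hypothetical helper, and the key is assumed to be unencrypted (an encrypted key would need -passin):

```shell
# Hypothetical helper: succeed if the certificate and the private key belong together.
# Returns 1 on mismatch and 2 if either file cannot be read by openssl.
cert_matches_key() {
    local cert="$1" key="$2" cert_pub key_pub
    cert_pub=$(openssl x509 -in "$cert" -noout -pubkey 2>/dev/null) || return 2
    key_pub=$(openssl pkey -in "$key" -pubout 2>/dev/null) || return 2
    [ "$cert_pub" = "$key_pub" ]
}

# Example (file names are assumptions):
#   cert_matches_key /etc/pki/tls/certs/collector.pem /etc/pki/tls/private/collector.key
```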

SKOOR Engine license

Obtain a valid license from SKOOR and add the necessary lines to the file:

/etc/opt/eranger/eranger-server.cfg

Example with a license of 1000 devices:

license_name = Example customer
license_feature_set = 3.1
license_devices= 1000
license_key = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

xxxx… represents the actual license key. Make sure the actual key is inserted in a single line with no newline characters in-between.
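Because a wrapped license key is an easy mistake when pasting, a quick check can help. A heuristic sketch; license_key_ok is a hypothetical helper that succeeds only if there is exactly one active license_key line with a non-empty value and the following line does not look like a continuation (a non-comment line without =):

```shell
# Hypothetical helper: heuristic check of the license_key line
license_key_ok() {
    awk '
        # A non-comment line without "=" right after license_key looks wrapped
        after_key && !/^[ \t]*(#|$)/ && !/=/ { wrapped = 1 }
        { after_key = 0 }
        /^[ \t]*license_key[ \t]*=/ {
            n++
            v = $0
            sub(/^[^=]*=[ \t]*/, "", v)
            if (v == "") empty = 1
            after_key = 1
        }
        END { exit !(n == 1 && !empty && !wrapped) }' "${1:-/etc/opt/eranger/eranger-server.cfg}"
}

# Example:
#   license_key_ok && echo "license_key looks fine"
```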

SKOOR Engine configuration

Extend the PATH variable for root in:

~/.bashrc

Add:

PATH=$PATH:/opt/eranger/bin
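To avoid adding the line twice when the setup is repeated, it can be appended only if it is not present yet. A sketch; add_to_path_rc is a hypothetical helper:

```shell
# Hypothetical helper: append "PATH=$PATH:<dir>" to a shell rc file once
add_to_path_rc() {
    local rc="${1:-$HOME/.bashrc}" dir="${2:-/opt/eranger/bin}"
    # The backslash keeps $PATH literal, so it is expanded when the rc file is read
    local line="PATH=\$PATH:${dir}"
    touch "$rc"
    grep -qxF -- "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
}
```

Calling add_to_path_rc without arguments updates root's ~/.bashrc when run as root; running it twice leaves a single entry.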

Then apply the changes and run eRanger.sh start to start SKOOR Engine services:

. ~/.bashrc

eRanger.sh start

===========================================================================
Choose command eRanger Version 5.0.0
Command - Action - Object - Current State
===========================================================================
1 - start - eRanger Server - started
2 - start - eRanger Collector - started
3 - start - eRanger Report - started
4 - start - eRanger Agent - started
a - start - all above
9 - start - eRanger Webservice - started
11 - start - PostgreSQL - started
12 - start - Rsyslog - started
13 - start - Trapd - stopped
14 - start - Httpd - started
15 - start - Smsd - stopped
16 - start - Postfix - started
r - Switch to restart mode
S - Switch to stop mode
c - Current eRanger status
0 - do_exit program
Enter selection:

The Webservice, IC Alerter and Ethd are only listed if the corresponding package is installed.

Enter a to start all required services. Then exit with 0.

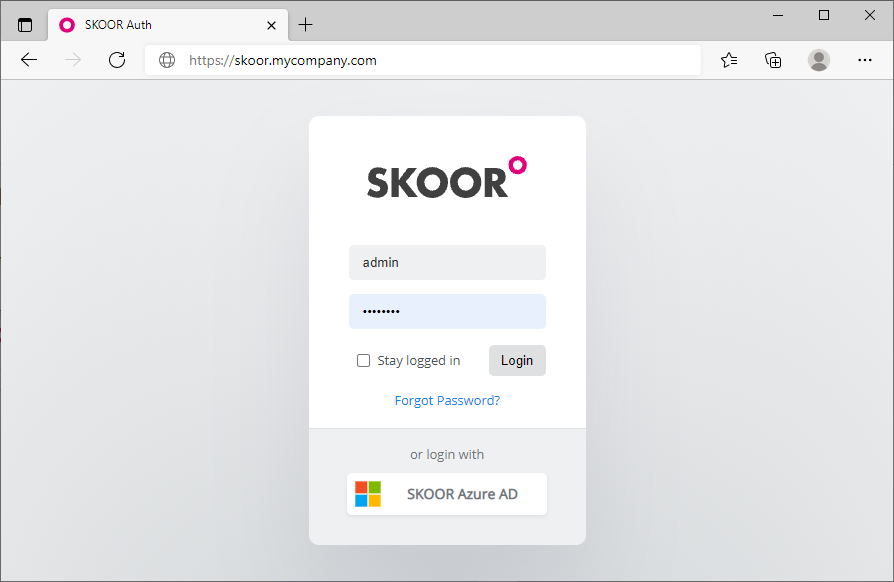

SKOOR Engine login

You should now be able to log in to the SKOOR Engine with a web browser by entering the SKOOR Engine's FQDN or IP address in the address bar.

The default login credentials are:

| Field | Value |
|---|---|
| Username | admin |
| Password | admin |

SSL certificate installation

Following a default installation, the browser will complain about untrusted SSL security certificates. Either accept the default self-signed certificate or generate and install a custom SSL certificate.

By default the certificates are located under:

/etc/pki/tls/certs/

The configuration file

/etc/httpd/conf.d/ssl.conf

must be adjusted to point to the correct certificates. An example entry looks like this:

SSLCertificateFile /etc/pki/tls/certs/wildcard_mycompany.ch.crt
SSLCertificateKeyFile /etc/pki/tls/private/private-key_mycompany.ch.key
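Before restarting, it can help to confirm that the files referenced in ssl.conf exist. A sketch, assuming a single SSL virtual host as in the default ssl.conf; check_ssl_files is a hypothetical helper. apachectl configtest additionally checks the overall configuration syntax:

```shell
# Hypothetical helper: check that the certificate and key files named in ssl.conf
# are set and readable. Commented directives are ignored; the last one wins.
check_ssl_files() {
    local conf="${1:-/etc/httpd/conf.d/ssl.conf}" directive path rc=0
    for directive in SSLCertificateFile SSLCertificateKeyFile; do
        path=$(awk -v d="$directive" '$1 == d { p = $2 } END { print p }' "$conf")
        if [ -z "$path" ]; then
            echo "$directive not set in $conf" >&2; rc=1
        elif [ ! -r "$path" ]; then
            echo "$directive points to missing or unreadable $path" >&2; rc=1
        fi
    done
    return $rc
}

# Example (as root):
#   check_ssl_files && apachectl configtest
```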

Restart the Apache web server with the command:

systemctl restart httpd

Disable HTTPS rewrite

When calling http://skoor.company.com, the webserver will rewrite the address to use https instead of http. If only http is to be used, disable the rewriting rule in the configuration file:

/etc/httpd/conf.d/eranger.conf

Comment out the following three lines by putting a hash sign (#) at the beginning of each:

# RewriteEngine On

# RewriteCond %{HTTPS} !=on

# RewriteRule ^/?(.*) https://%{SERVER_NAME}/$1 [R,L]
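The same edit can be scripted. A sketch, assuming GNU sed and that the three lines appear in eranger.conf as shown; disable_https_rewrite is a hypothetical helper that keeps a .bak copy and comments out only these three directives:

```shell
# Hypothetical helper: comment out the HTTP-to-HTTPS rewrite directives
disable_https_rewrite() {
    local conf="${1:-/etc/httpd/conf.d/eranger.conf}"
    cp -p "$conf" "$conf.bak"
    sed -i -E \
        -e 's/^([[:space:]]*)(RewriteEngine[[:space:]]+On)/\1# \2/' \
        -e 's/^([[:space:]]*)(RewriteCond[[:space:]]+%\{HTTPS\}[[:space:]]+!=on)/\1# \2/' \
        -e 's/^([[:space:]]*)(RewriteRule[[:space:]].*https:\/\/%\{SERVER_NAME\})/\1# \2/' \
        "$conf"
}
```

Lines that are already commented out no longer match, so the edit is not applied twice.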

Restart the Apache web server with the command:

systemctl restart httpd

Optimizing SKOOR on virtual environments

If SKOOR Engine or a SKOOR collector runs inside a virtual machine, the I/O scheduler must be adapted for performance reasons. By default, Red Hat releases before 7 install with the cfq I/O scheduler. The recommended I/O scheduler for Red Hat systems running in a virtual machine is noop on Red Hat 7 and none on Red Hat 8 / 9.

For Red Hat 7 systems

Run the following commands to enable the noop scheduler on a running system for the sda block device, which usually corresponds to the first disk:

# echo noop > /sys/block/sda/queue/scheduler
# cat /sys/block/sda/queue/scheduler
[noop] anticipatory deadline cfq

The noop scheduler is now marked as the current scheduler. Run this command for each of the virtual disks configured for the system (replace sda with the name of the virtual disk).
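Instead of repeating the command per disk, a loop over all virtual disks can be used. A minimal sketch; set_scheduler_all is a hypothetical helper, the device name patterns sd*, vd* and xvd* are assumptions about how the virtual disks are named, and the optional second argument replaces the sysfs root (useful for testing). Pass noop on Red Hat 7 and none on Red Hat 8 / 9:

```shell
# Hypothetical helper: set the I/O scheduler for all matching disks (not persistent)
set_scheduler_all() {
    local sched="$1" sysfs="${2:-/sys}" f
    for f in "$sysfs"/block/sd*/queue/scheduler \
             "$sysfs"/block/vd*/queue/scheduler \
             "$sysfs"/block/xvd*/queue/scheduler; do
        [ -e "$f" ] || continue          # the pattern matched no device
        echo "$sched" > "$f" &&
            echo "$(basename "$(dirname "$(dirname "$f")")"): $(cat "$f")"
    done
}

# Example (as root):
#   set_scheduler_all noop
```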

However, the above setting will not persist across reboots. Use the following guide to enable the noop scheduler persistently.

Set noop globally for all devices by editing the file /etc/default/grub as shown below and then rebuilding the grub2 configuration file:

# vi /etc/default/grub
...
GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=rhel00/root rd.lvm.lv=rhel00/swap"                (before)
GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=rhel00/root rd.lvm.lv=rhel00/swap elevator=noop"  (after)
...
# grub2-mkconfig -o /boot/grub2/grub.cfg            (on BIOS-based machines)
# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg   (on UEFI-based machines)
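The edit of GRUB_CMDLINE_LINUX can also be scripted. A sketch, assuming GNU sed and a double-quoted GRUB_CMDLINE_LINUX value as in the example; add_kernel_arg is a hypothetical helper that removes an existing token with the same name before appending, so it does not create duplicates:

```shell
# Hypothetical helper: set a kernel argument such as elevator=noop in /etc/default/grub
add_kernel_arg() {
    local arg="$1" grubcfg="${2:-/etc/default/grub}"
    local name="${arg%%=*}"
    # On the GRUB_CMDLINE_LINUX line: drop an old "name=..." token,
    # then insert the new argument before the closing quote
    sed -i -E "/^GRUB_CMDLINE_LINUX=/{s/[[:space:]]*${name}=[^\" ]*//;s/\"\$/ ${arg}\"/}" "$grubcfg"
}

# Example (as root), then rebuild grub.cfg with grub2-mkconfig as shown above:
#   add_kernel_arg elevator=noop
```

On Red Hat systems, grubby --update-kernel=ALL --args="elevator=noop" is another way to add the argument directly to the existing boot entries.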

For Red Hat 8 / 9 systems

Run the following commands to enable the none scheduler on a running system for the sda block device, which usually corresponds to the first disk:

# echo none > /sys/block/sda/queue/scheduler
# cat /sys/block/sda/queue/scheduler
[none] mq-deadline kyber bfq

The none scheduler is now marked as the current scheduler. Run this command for each of the virtual disks configured for the system (replace sda with the name of the virtual disk).

However, the above setting will not persist across reboots. Use the following guide to enable the none scheduler persistently.

Set none globally for all devices by editing the file /etc/default/grub as shown below and then rebuilding the grub2 configuration file:

# vi /etc/default/grub
...
GRUB_CMDLINE_LINUX="crashkernel=auto resume=/dev/mapper/rh9-swap rd.lvm.lv=rh9/root rd.lvm.lv=rh9/swap"                (before)
GRUB_CMDLINE_LINUX="crashkernel=auto resume=/dev/mapper/rh9-swap rd.lvm.lv=rh9/root rd.lvm.lv=rh9/swap elevator=none"  (after)
...
# grub2-mkconfig -o /boot/grub2/grub.cfg            (on BIOS-based machines)
# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg   (on UEFI-based machines)
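After a reboot, cat /sys/block/sda/queue/scheduler shows whether the setting took effect. Red Hat's performance documentation for these releases describes udev rules (and TuneD) for persistent scheduler settings; if elevator=none is not applied, a udev rule is one alternative. A sketch; write_scheduler_rule and the rule file name 60-skoor-io-scheduler.rules are hypothetical, and the kernel name patterns sd* and vd* are assumptions about the virtual disk names:

```shell
# Hypothetical helper: write a udev rule that sets the I/O scheduler for whole
# disks (ENV{DEVTYPE}=="disk" skips partitions)
write_scheduler_rule() {
    local sched="$1" out="${2:-/etc/udev/rules.d/60-skoor-io-scheduler.rules}"
    cat > "$out" <<EOF
ACTION=="add|change", SUBSYSTEM=="block", ENV{DEVTYPE}=="disk", KERNEL=="sd*|vd*", ATTR{queue/scheduler}="${sched}"
EOF
}

# Example (as root):
#   write_scheduler_rule none
#   udevadm control --reload-rules
#   udevadm trigger --type=devices --action=change
```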

Creating a yum repository

If remote collector hosts have no access to any Red Hat repositories but the SKOOR Engine does, it can help to create a simple software repository on the SKOOR Engine host that holds all RPM files needed for installation via yum. Collector hosts reach the repository via port 443, which is already open. The following steps set up such a repository and access it from a remote collector host:

Create the repository root directory and copy all required RPM files:

# cd /srv/eranger/html
# mkdir repo
# cp /path/to/*.rpm repo/
# yum install createrepo
# createrepo ./repo

Replace /path/to/ above with the path where the required RPM files have been copied on the SKOOR Engine host. This will create a new subdirectory named repodata inside the repo directory.

Now add the repository as a package installation source on remote hosts (e.g. a SKOOR Engine collector host):

# vi /etc/yum.repos.d/SKOOR.repo

[SKOOR]
name=SKOOR
baseurl=https://<ip or hostname of repository>/repo/
sslverify=false
gpgcheck=0
enabled=1
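The repository file can also be written by a script, which avoids typos when setting up several collectors. A sketch; write_skoor_repo is a hypothetical helper. If the collector already has the root CA certificate of the SKOOR Engine (see the HTTPS section), passing its path writes the standard sslcacert= option instead of turning off certificate verification with sslverify=false:

```shell
# Hypothetical helper: write the SKOOR repository definition for a collector host
write_skoor_repo() {
    local host="$1" out="${2:-/etc/yum.repos.d/SKOOR.repo}" cacert="$3"
    {
        echo "[SKOOR]"
        echo "name=SKOOR"
        echo "baseurl=https://${host}/repo/"
        if [ -n "$cacert" ]; then
            echo "sslcacert=${cacert}"     # verify the server certificate with this CA
        else
            echo "sslverify=false"         # as in the example above
        fi
        echo "gpgcheck=0"
        echo "enabled=1"
    } > "$out"
}

# Example (as root):
#   write_skoor_repo 10.10.10.10 /etc/yum.repos.d/SKOOR.repo /etc/pki/tls/certs/rootCA.pem
```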

Check access to the newly added repo from the remote host:

# yum clean all
# yum repolist

Now the remote host can install software using the SKOOR repository as package source.

Adding a DVD or CD as a repository

To install software from a Red Hat DVD or CD inserted in the drive, add a new repository by creating the following file:

vi /etc/yum.repos.d/RHEL_6.5_DVD.repo

[RHEL_6.5_DVD]
name=RHEL_6.5_DVD
baseurl="file:///cdrom/"
gpgcheck=0
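The repository only works if the disc is mounted at the path used in baseurl. A sketch that writes the file for a given mount point; write_dvd_repo is a hypothetical helper, and the device name /dev/cdrom and the file name DVD.repo in the example are assumptions. Installation media for Red Hat 8 / 9 contain separate BaseOS and AppStream repositories, so the sketch writes one section per directory when it finds them:

```shell
# Hypothetical helper: write a local repository definition for mounted install media
write_dvd_repo() {
    local mnt="${1:-/cdrom}" out="${2:-/etc/yum.repos.d/DVD.repo}" sub
    : > "$out"
    if [ -d "$mnt/BaseOS" ]; then
        # Red Hat 8 / 9 media: one repository per subdirectory
        for sub in BaseOS AppStream; do
            [ -d "$mnt/$sub" ] || continue
            printf '[DVD-%s]\nname=DVD-%s\nbaseurl=file://%s/%s/\ngpgcheck=0\n\n' \
                "$sub" "$sub" "$mnt" "$sub" >> "$out"
        done
    else
        # Older media: a single repository at the top of the disc
        printf '[DVD]\nname=DVD\nbaseurl=file://%s/\ngpgcheck=0\n' "$mnt" >> "$out"
    fi
}

# Example (as root):
#   mkdir -p /cdrom && mount -o ro /dev/cdrom /cdrom
#   write_dvd_repo /cdrom
#   yum repolist
```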